Create Your First AI Chat App with Python and Gemini — Desktop GUI in Minutes

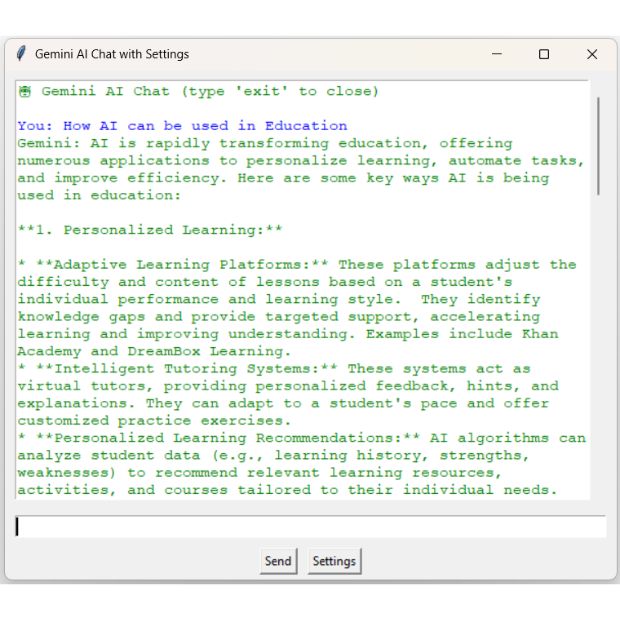

In this first part of our Gemini AI desktop app series, we will build a simple chat interface using Python and Tkinter. This app will let you send messages to the Gemini AI model and get responses — just like a basic chatbot. You will also learn how to configure the Gemini API key and run the app locally.

📦 Step 1: Install Required Packages

    pip install google-generativeai python-dotenv

🔐 Step 2: Create Your .env File

Create a file named .env in the same folder as your Python script, and add your Gemini API key like this:

    GOOGLE_API_KEY=your_actual_api_key_here

💬 Step 3: Gemini Chat App (Basic Version)

This is the full working script; save it as gemini_chat.py, the filename used in the run command. It creates a Tkinter GUI with a chat window and an input box, and it maintains chat context using Gemini’s start_chat() method.

    import tkinter as tk
    from tkinter import scrolledtext
    import os
    import google.generativeai as genai
    from dotenv import load_dotenv

    # Load API key from the .env file and stop early if it is missing
    load_dotenv()
    GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")
    if not GOOGLE_API_KEY:
        raise SystemExit("GOOGLE_API_KEY is not set; check your .env file.")

    # Configure Gemini and start a chat session that keeps conversation context
    genai.configure(api_key=GOOGLE_API_KEY)
    model = genai.GenerativeModel("gemini-1.5-flash-latest")
    chat = model.start_chat()

    # Function to send message and get response
    def send_message():
        user_input = entry.get().strip()
        if not user_input:
            return
        if user_input.lower() in ["exit", "quit"]:
            root.destroy()
            return

        chat_window.insert(tk.END, f"You: {user_input}\n", "user")
        entry.delete(0, tk.END)
        try:
            response = chat.send_message(user_input)
            chat_window.insert(tk.END, f"Gemini: {response.text}\n\n", "bot")
        except Exception as e:
            chat_window.insert(tk.END, f"Error: {e}\n", "error")
        # Scroll to the newest line, whether it is a reply or an error
        chat_window.yview(tk.END)

    # Build GUI
    root = tk.Tk()
    root.title("Gemini AI Chat")
    root.geometry("600x500")

    chat_window = scrolledtext.ScrolledText(root, wrap=tk.WORD, font=("Courier", 11))
    chat_window.pack(padx=10, pady=10, fill=tk.BOTH, expand=True)
    chat_window.tag_config("user", foreground="blue")
    chat_window.tag_config("bot", foreground="green")
    chat_window.tag_config("error", foreground="red")
    chat_window.insert(tk.END, "🤖 Gemini AI Chat (type 'exit' to close)\n\n", "bot")

    entry = tk.Entry(root, font=("Courier", 12))
    entry.pack(padx=10, pady=5, fill=tk.X)
    entry.bind("<Return>", lambda event: send_message())

    send_btn = tk.Button(root, text="Send", command=send_message)
    send_btn.pack(pady=5)

    root.mainloop()

▶️ Run the App

    python gemini_chat.py

💬 Save Chat Conversations in Your Gemini Tkinter App

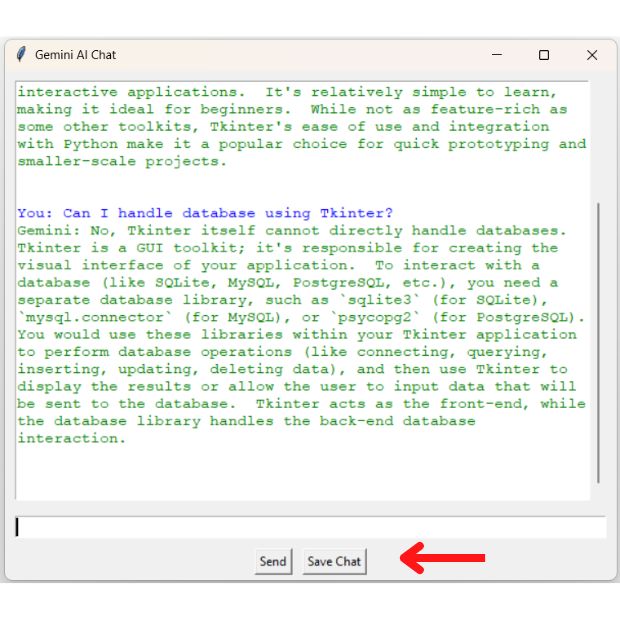

This enhanced version of the Tkinter app adds a way to save the full conversation. Clicking the "Save Chat" button opens a save dialog (tkinter.filedialog) and writes the chat window's text to a .txt file on the local system. This is useful for preserving responses, notes, or code snippets from the chat.
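The save step itself does not depend on Tkinter, so it can be sketched and tested on its own. What follows is a minimal sketch rather than the author's code: `save_transcript` is a hypothetical helper name, and the path is passed in directly, where the app would get it from the save dialog.

```python
from pathlib import Path


def save_transcript(text: str, file_path: str) -> bool:
    """Write the chat text to file_path as UTF-8.

    Returns False when there is nothing to save or no path was chosen
    (for example, because a save dialog was cancelled).
    """
    content = text.strip()
    if not content or not file_path:
        return False
    # UTF-8 keeps emoji and non-ASCII replies intact
    Path(file_path).write_text(content + "\n", encoding="utf-8")
    return True
```

In the app, the text would come from chat_window.get("1.0", tk.END) and the path from filedialog.asksaveasfilename(...), as in the full script for this version.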

    import tkinter as tk
    from tkinter import scrolledtext, filedialog
    import os
    import google.generativeai as genai
    from dotenv import load_dotenv

    # Load API key from .env file and stop early if it is missing
    load_dotenv()
    GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")
    if not GOOGLE_API_KEY:
        raise SystemExit("GOOGLE_API_KEY is not set; check your .env file.")

    # Configure Gemini API
    genai.configure(api_key=GOOGLE_API_KEY)
    model = genai.GenerativeModel("gemini-1.5-flash-latest")
    chat = model.start_chat()

    # Function to send message to Gemini and display response
    def send_message():
        user_input = entry.get().strip()
        if not user_input:
            return
        if user_input.lower() in ["exit", "quit"]:
            root.destroy()
            return

        chat_window.insert(tk.END, f"You: {user_input}\n", "user")
        entry.delete(0, tk.END)
        try:
            response = chat.send_message(user_input)
            chat_window.insert(tk.END, f"Gemini: {response.text}\n\n", "bot")
        except Exception as e:
            chat_window.insert(tk.END, f"Error: {e}\n", "error")
        # Scroll to the newest line, whether it is a reply or an error
        chat_window.yview(tk.END)

    # Function to save the chat content to a local .txt file
    def save_chat():
        content = chat_window.get("1.0", tk.END).strip()
        if not content:
            return
        file_path = filedialog.asksaveasfilename(
            defaultextension=".txt",
            filetypes=[("Text files", "*.txt"), ("All files", "*.*")]
        )
        if file_path:  # empty when the user cancels the dialog
            with open(file_path, "w", encoding="utf-8") as file:
                file.write(content)

    # Build GUI
    root = tk.Tk()
    root.title("Gemini AI Chat")
    root.geometry("600x500")

    chat_window = scrolledtext.ScrolledText(root, wrap=tk.WORD, font=("Courier", 11))
    chat_window.pack(padx=10, pady=10, fill=tk.BOTH, expand=True)
    chat_window.tag_config("user", foreground="blue")
    chat_window.tag_config("bot", foreground="green")
    chat_window.tag_config("error", foreground="red")
    chat_window.insert(tk.END, "🤖 Gemini AI Chat (type 'exit' to close)\n\n", "bot")

    entry = tk.Entry(root, font=("Courier", 12))
    entry.pack(padx=10, pady=5, fill=tk.X)
    entry.bind("<Return>", lambda event: send_message())

    # Send and Save Chat buttons sit side by side in one frame
    frame = tk.Frame(root)
    frame.pack(pady=5)

    send_btn = tk.Button(frame, text="Send", command=send_message)
    send_btn.pack(side=tk.LEFT, padx=5)

    save_btn = tk.Button(frame, text="Save Chat", command=save_chat)
    save_btn.pack(side=tk.LEFT, padx=5)

    root.mainloop()

🚀 What’s Next? Adding Settings

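In the next part, we add a Settings window so users can change the model and temperature, making this AI tool more flexible and user-friendly. In the script for that version, applying new settings starts a fresh chat session, so earlier context is not carried over.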
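The temperature setting reaches Gemini as a small dictionary passed with each message. As a hedged sketch of that step, the hypothetical helper below (`build_generation_config` is not part of the original script or the library) clamps a value to the 0.0 to 1.0 range of the tutorial's slider and rounds it to the slider's 0.1 step. The range mirrors the slider, not any limit documented for the API.

```python
def build_generation_config(temperature: float) -> dict:
    """Return a generation_config dict with a sanitized temperature.

    The 0.0-1.0 bounds are an assumption taken from the Settings slider.
    """
    clamped = min(1.0, max(0.0, float(temperature)))
    return {"temperature": round(clamped, 1)}
```

The result has the same shape as the generation_config argument that the Settings script passes to chat.send_message(...), so it could be used in its place.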

    import tkinter as tk
    from tkinter import scrolledtext, Toplevel, Label, Button, Scale, OptionMenu
    import os
    import google.generativeai as genai
    from dotenv import load_dotenv

    # Load API key and stop early if it is missing
    load_dotenv()
    GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")
    if not GOOGLE_API_KEY:
        raise SystemExit("GOOGLE_API_KEY is not set; check your .env file.")

    # Function to apply settings
    def apply_settings():
        global model, chat, chat_temperature
        model = genai.GenerativeModel(selected_model.get())
        chat = model.start_chat()  # fresh session: earlier context is dropped
        chat_temperature = temperature.get()
        chat_window.insert(tk.END, f"🛠️ Settings applied: {selected_model.get()}, Temp: {chat_temperature}\n\n", "bot")

    # Function to open settings window
    def open_settings():
        settings_win = Toplevel(root)
        settings_win.title("Settings")
        settings_win.geometry("300x200")

        Label(settings_win, text="Select Model:").pack(pady=5)
        OptionMenu(settings_win, selected_model,
                   "gemini-1.5-flash-latest", "gemini-1.5-pro-latest").pack()

        Label(settings_win, text="Temperature:").pack(pady=5)
        Scale(settings_win, from_=0.0, to=1.0, resolution=0.1,
              orient="horizontal", variable=temperature).pack()

        # Apply the settings, then close the settings window
        def apply_and_close():
            apply_settings()
            settings_win.destroy()

        Button(settings_win, text="Apply", command=apply_and_close).pack(pady=10)

    # Function to send message
    def send_message():
        user_input = entry.get().strip()
        if not user_input:
            return
        if user_input.lower() in ["exit", "quit"]:
            root.destroy()
            return

        chat_window.insert(tk.END, f"You: {user_input}\n", "user")
        entry.delete(0, tk.END)
        try:
            response = chat.send_message(user_input, generation_config={"temperature": chat_temperature})
            chat_window.insert(tk.END, f"Gemini: {response.text}\n\n", "bot")
        except Exception as e:
            chat_window.insert(tk.END, f"Error: {e}\n", "error")
        # Scroll to the newest line, whether it is a reply or an error
        chat_window.yview(tk.END)

    # Build GUI
    root = tk.Tk()
    root.title("Gemini AI Chat with Settings")
    root.geometry("600x500")

    # Define Tkinter variables AFTER creating root
    selected_model = tk.StringVar(value="gemini-1.5-flash-latest")
    temperature = tk.DoubleVar(value=0.7)
    chat_temperature = temperature.get()

    # Initialize model and chat
    genai.configure(api_key=GOOGLE_API_KEY)
    model = genai.GenerativeModel(selected_model.get())
    chat = model.start_chat()

    # Chat display
    chat_window = scrolledtext.ScrolledText(root, wrap=tk.WORD, font=("Courier", 11))
    chat_window.pack(padx=10, pady=10, fill=tk.BOTH, expand=True)
    chat_window.tag_config("user", foreground="blue")
    chat_window.tag_config("bot", foreground="green")
    chat_window.tag_config("error", foreground="red")
    chat_window.insert(tk.END, "🤖 Gemini AI Chat (type 'exit' to close)\n\n", "bot")

    # Input field
    entry = tk.Entry(root, font=("Courier", 12))
    entry.pack(padx=10, pady=5, fill=tk.X)
    entry.bind("<Return>", lambda event: send_message())

    # Button frame
    frame = tk.Frame(root)
    frame.pack(pady=5)

    send_btn = tk.Button(frame, text="Send", command=send_message)
    send_btn.pack(side=tk.LEFT, padx=5)

    settings_btn = tk.Button(frame, text="Settings", command=open_settings)
    settings_btn.pack(side=tk.LEFT, padx=5)

    # Start GUI loop
    root.mainloop()

Frequently Asked Questions (FAQ)

Q1: How do I create a chatbot using Python and the Gemini API?

You can use the Tkinter library to build a graphical interface and integrate it with Google's Gemini Generative AI model using the google.generativeai package. It allows users to enter text and receive AI-generated responses directly in the GUI.

Q2: Do I need internet access for this chatbot to work?

Yes. The chatbot uses the Gemini API, a cloud-based service, so an internet connection is required to send messages and receive responses.
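Because every reply depends on a network call, a brief connection drop surfaces as an error in the chat window. One possible way to soften that, shown here only as a sketch under stated assumptions, is to retry the call a few times before giving up. `send_with_retry` is a hypothetical helper, not part of the tutorial or the Gemini library, and `send` stands for any callable such as a chat session's send_message method.

```python
import time


def send_with_retry(send, text, attempts=3, delay=1.0, sleep=time.sleep):
    """Call send(text), retrying on any exception up to `attempts` times.

    Waits delay * attempt_number seconds between tries and re-raises the
    last error if every attempt fails.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return send(text)
        except Exception as exc:  # broad on purpose, like the tutorial's handler
            last_error = exc
            if attempt < attempts:
                sleep(delay * attempt)
    raise last_error
```

Retrying on every exception is a simplification; a real app would likely retry only on connection-related errors. The waits also block the Tkinter window while they run.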

Q3: Can I extend this chatbot with memory or multi-turn conversations?

Yes, and the scripts above already do this. Calling model.start_chat() returns a chat session that keeps past inputs and responses, so each new message is answered in the context of the conversation so far.
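To make the idea of session memory concrete, here is a toy stand-in written for illustration only. `ToyChatSession` is hypothetical and does not call Gemini; it just shows the pattern of appending each turn to a history list that the next reply can draw on.

```python
class ToyChatSession:
    """Illustrative stand-in for a chat session that remembers earlier turns."""

    def __init__(self):
        self.history = []  # list of {"role": ..., "text": ...} dicts, oldest first

    def send_message(self, text: str) -> str:
        self.history.append({"role": "user", "text": text})
        # A real model would read the whole history here; this toy only
        # counts the user turns to show that earlier messages are retained.
        user_turns = sum(1 for turn in self.history if turn["role"] == "user")
        reply = f"(reply {user_turns}) you said: {text}"
        self.history.append({"role": "model", "text": reply})
        return reply
```

Sending two messages to one ToyChatSession leaves four entries in its history, which is the multi-turn behavior that a Gemini chat session provides with real model replies.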

Subhendu Mohapatra

Author

Passionate about coding and teaching, I publish practical tutorials on PHP, Python, JavaScript, SQL, and web development. My goal is to make learning simple, engaging, and project‑oriented with real examples and source code.